Unlocking the Power of AI in B2B and B2C Commerce

Artificial Intelligence(AI) is all the rage right now, with claims that it can do everything from reading your pet's mind to ending world hunger, and...


Naresh Ram

:

Jul 29, 2023 9:22:00 AM


Generative AI is in the midst of transforming the way we do business by cutting costs, improving margins, and bringing about unfathomable efficiency with its language, sound, and image prowess, like the Dory version of HAL.

The power of AI will help knowledge workers broadly synthesize information and then rapidly use it to create results. Results that will unlock productivity at a scale previously impossible to obtain. It will be like your merchandiser has a sidekick that works 24x7 to highlight errors, recommend categories, copy, etc.

At AAXIS, we have been working with Machine Learning and Artificial Intelligence (AI) for over 10 years and have focused on Generative AI for the last year. During this time, we have curated a set of tools and processes to achieve greater business value.

So, how can we enable our merchandisers and unmask the little tech gnome that can help them gain 10x productivity?

Foundational AI Models such as Google's PaLM Bison, OpenAI's GPT, Cohere, Midjourney, Stable Diffusion, and DALL-E 2 can be our merchandiser's superhero sidekicks, instrumental in tremendously boosting productivity. Picture this: instead of drowning in the dull depths of categorization, mind-numbing spelling and grammar correction, and boring meta-tag generation, we can be free to create captivating copy, curate jaw-dropping images, and focus on differentiating our business from the pack. With the linguistic and image-creation prowess of our friendly neighborhood Foundational Models, our merchandisers can focus on what matters most instead of getting bogged down with mundane tasks.

Better yet, we can offload the bulk of the work to automated tasks, run them nightly, and have the results ready for review first thing in the morning along with our cup of joe. Using Application Programming Interfaces (APIs), thousands of tasks can be performed every night. It’s like machines speaking to machines in their own language: APIs let our tool interact with third-party services efficiently, and the information comes back as JavaScript Object Notation (JSON), which the tool parses to extract what it is looking for. These conversations can happen in parallel without fear of getting mixed up. It’s like stockbrokers talking to each other on the trading floor. It may seem chaotic to outsiders, but they understand each other perfectly.
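To make the trading-floor picture a little more concrete, here is a minimal Python sketch of parallel calls that exchange JSON. It is an illustration under stated assumptions, not our production tooling: `call_model_api` is a hypothetical stand-in that fakes the provider's reply so the sketch runs offline, and the SKUs are made up.

```python
import json
from concurrent.futures import ThreadPoolExecutor


def call_model_api(product: dict) -> str:
    """Hypothetical stand-in for an HTTP call to a model provider.

    A real implementation would POST the product to a third-party endpoint;
    here we fake the JSON reply so the sketch runs offline.
    """
    category = "Storage" if "Shelves" in product["name"] else "Plumbing"
    return json.dumps({"sku": product["sku"], "category": category})


def categorize(product: dict) -> tuple:
    """Send one product, parse the JSON reply, and pull out the fields we need."""
    reply = json.loads(call_model_api(product))
    return reply["sku"], reply["category"]


def run_nightly(products: list, max_workers: int = 8) -> dict:
    """Process products in parallel; each reply is keyed by its own SKU."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(pool.map(categorize, products))


# Made-up SKUs, product names borrowed from the samples in this article.
catalog = [
    {"sku": "A1", "name": "Steel Storage Shelves, 65 x 55 in."},
    {"sku": "B2", "name": "Crystal Blu Classic Two Lever Bar Faucet"},
]
results = run_nightly(catalog)
print(results)
```

Because every reply carries its own SKU, the results cannot get mixed up no matter which call finishes first.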

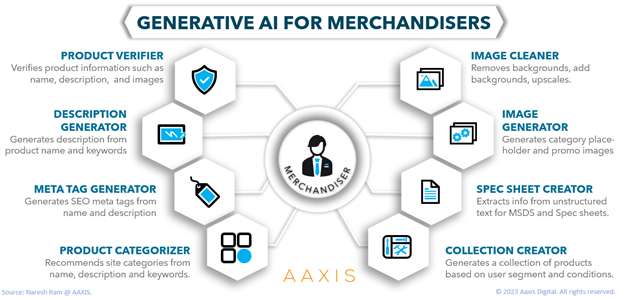

At AAXIS, we have built tools focused on specific use cases. We designed these tools like Lego bricks so they could be combined with other tools to automate catalog maintenance tasks.

Here are some of the more interesting bricks we’ve made:

Product Verifier can ensure that a product is in the right category, its description matches the name, its images are accurate, etc. By spotlighting suspect products, this app can help merchandisers scan and fix thousands of products quickly.

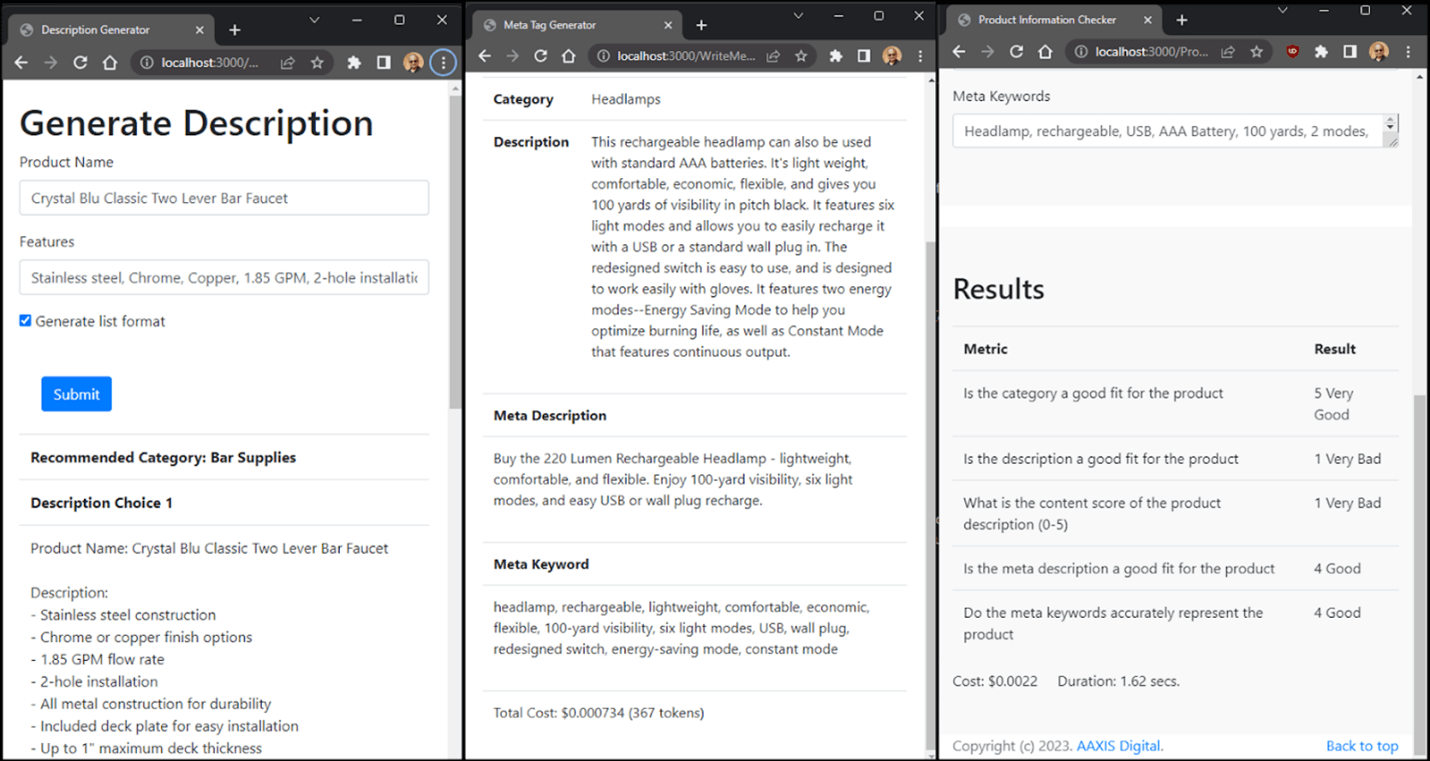

Description Generator can write a starter description for the merchandiser to review and correct as necessary. They can give a few keywords to get the process started. Generative AI will mimic the merchandiser's tone and language structure to ensure a consistent catalog.

Meta Tag Generator can scan the catalog and detect products whose meta tags can be improved. It then recommends new keywords and flags the products for approval by the Merchandiser. This will ensure that the product receives its due attention from search engines controlled by the lords of Google and the like.

Product Categorizer can look at the names and descriptions of incoming products and determine which category or categories best fit the product. Great for sites importing products from ERP systems with less than ideal taxonomy or for marketplaces. This will help your users to find the product easily, and they will buy that much more.

Image Cleaner can scan images, even user-generated content, and determine if the product is prominently placed in it. Image models can also automatically remove backgrounds and replace them with a standard product background making your site look and feel professional. This improves shopper confidence in the site and leads to increased conversion.

Image Generators like Midjourney, Stable Diffusion, and DALL-E 2 can generate category placeholder images. I don’t believe product image generation for eCommerce websites is quite there yet, but these image models can generate good promotional content for automated personalized banner backgrounds. This allows the merchandiser/marketer to capitalize on the micro-moments in a customer's journey.

Product Collection Creator can curate a set of items to recommend to different users. I know this is recommendations territory, which typically uses collaborative filtering, and any half-decent algorithm would make mincemeat of any foundation model. But what if you don’t have any collaboration data (the cold-start problem)? Large Language Models (LLMs) can pick products from context. For example, an LLM can pick a collection of cosmetics based on the shopper's local weather. Think “LA Marathon on a hot sweltering day” collection. Try this on for size, collaborative filtering! No? Didn’t think so.

MSDS and Warranty Sheet Generator can extract information from unstructured content and fill up a template to generate MSDS and Warranty sheets. The merchandiser can validate the information populated, avoiding unnecessary copy and paste. It can even highlight where it sourced the information so the merchandiser can validate it quickly.

All the above can be either implemented as a scheduled job or as on-demand widgets in the admin section. When implemented as a scheduled job, the changes should be grouped as a project and set to be manually verified by the merchandising team.
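One way to picture the "project" that a scheduled job hands to the merchandising team is sketched below. The class and field names (`ProposedChange`, `ReviewProject`, `meta_keywords`, and so on) are hypothetical, chosen only for illustration. The point is that nothing is published until a human approves it.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ProposedChange:
    """A single AI-suggested edit awaiting human review (hypothetical shape)."""
    sku: str
    field_name: str
    old_value: str
    new_value: str
    approved: bool = False


@dataclass
class ReviewProject:
    """Groups one nightly run's suggestions so they can be verified together."""
    name: str
    changes: List[ProposedChange] = field(default_factory=list)

    def propose(self, change: ProposedChange) -> None:
        self.changes.append(change)

    def pending(self) -> List[ProposedChange]:
        """Changes the merchandiser has not approved yet."""
        return [c for c in self.changes if not c.approved]

    def publishable(self) -> List[ProposedChange]:
        """Only approved changes are ever pushed to the catalog."""
        return [c for c in self.changes if c.approved]


project = ReviewProject(name="nightly-catalog-run")
project.propose(ProposedChange("A1", "meta_keywords", "shelves", "steel storage shelves"))
project.propose(ProposedChange("B2", "category", "Misc", "Bar Faucets"))

# The merchandiser signs off on the first suggestion only.
project.changes[0].approved = True
print(len(project.pending()), len(project.publishable()))
```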

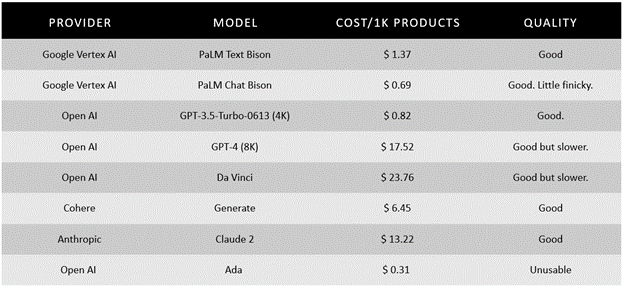

The cost will depend on the model selected and the length of the product text. In general, most text-based tasks in our tests cost between $0.50 and $2 per 1,000 products.

This is fine and all, but are they any good?

Ideas are a dime a dozen (or a dime for 100 if you use GPT), but which ones are actually usable in commercial settings? To find out, my colleague Prashant Mishra, who heads our Data and AI Division, and I set out to test these with each provider and get a baseline of performance and cost. Note that for some models, a lot depends on the crafting of the prompt. YMMV.

Each model was given the following as a sample:

Product Name: Steel Storage Shelves, 65 x 55 in.

Keywords: Practical, spacious, 4000-pound capacity, hammered granite finish, powder coating, tread-plate design, bold, welded steel construction, durable, adjustable shelves, four shelves.

Output: Add these practical and spacious shelves to your office storage collection. This shelving unit features welded steel construction for added durability and support, four adjustable shelves for versatile storage options, and a 4000-pound weight capacity. The hammered granite finish and tread-plate design create a bold look in your space, and the powder coating provides a layer of protection against wear and tear.

And we asked the model very nicely, via API, to generate an impactful web description for this one.

Product Name: Crystal Blu Classic Two Lever Bar Faucet

Keywords: Stainless steel, Chrome, Copper, 1.85 GPM, 2 hole installation, all metal, included deck place, upto 1" openings, 1/2" NPSM connection, Ceramic disc value, ADA compliant, Lead free, Residential and commercial, CEC Compliant
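For readers who want to try this at home, the sample-plus-request setup above can be assembled into a single one-shot prompt roughly as follows. This is a sketch: the exact prompt wording and layout we sent to each provider are not reproduced here, so the `build_prompt` helper and its format are assumptions. The keywords are passed through exactly as given to the models, typos and all.

```python
# The worked example shown to the model (copied from the sample above).
EXAMPLE = {
    "name": "Steel Storage Shelves, 65 x 55 in.",
    "keywords": (
        "Practical, spacious, 4000-pound capacity, hammered granite finish, "
        "powder coating, tread-plate design, bold, welded steel construction, "
        "durable, adjustable shelves, four shelves."
    ),
    "output": (
        "Add these practical and spacious shelves to your office storage "
        "collection. This shelving unit features welded steel construction for "
        "added durability and support, four adjustable shelves for versatile "
        "storage options, and a 4000-pound weight capacity. The hammered granite "
        "finish and tread-plate design create a bold look in your space, and the "
        "powder coating provides a layer of protection against wear and tear."
    ),
}


def build_prompt(example: dict, name: str, keywords: str) -> str:
    """Lay out one solved example, then the new product with an empty Output."""
    return (
        f"Product Name: {example['name']}\n"
        f"Keywords: {example['keywords']}\n"
        f"Output: {example['output']}\n\n"
        f"Product Name: {name}\n"
        f"Keywords: {keywords}\n"
        "Output:"
    )


prompt = build_prompt(
    EXAMPLE,
    name="Crystal Blu Classic Two Lever Bar Faucet",
    keywords=(
        'Stainless steel, Chrome, Copper, 1.85 GPM, 2 hole installation, all metal, '
        'included deck place, upto 1" openings, 1/2" NPSM connection, '
        'Ceramic disc value, ADA compliant, Lead free, Residential and commercial, '
        'CEC Compliant'
    ),
)
print(prompt)
```

Ending the prompt on an empty `Output:` nudges the model to continue in the same tone and structure as the example.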

Here is a short comparison of the costs:

At least some of you might be thinking there must be a typo. So, let me be very clear. To use Google’s PaLM Text Bison model to generate 1,000 product descriptions, you will pay Google one dollar and 37 cents billed monthly. For 1.5 cents, you can generate and stick 10 product descriptions into a database in 10 seconds or less. There is some development work required, of course.

For a mere 0.066 cents (or $0.66 for 1,000 products), the PaLM Chat Bison model generated the following description:

The Crystal Blu Classic Two Lever Bar Faucet is a high-quality, durable faucet that is perfect for residential and commercial use. It is made of stainless steel, chrome, and copper, and features a ceramic disc valve for smooth operation and long-lasting performance. The faucet has a 1.85 GPM flow rate and a 2-hole installation. It also includes a deck plate and is compatible with up to 1" openings. The Crystal Blu Classic Two Lever Bar Faucet is ADA and CEC compliant, and is lead-free.

Not bad for a fraction of a cent, eh? Now for 2.4 cents (or $24 for 1,000 products), OpenAI’s Davinci generated:

Bring a classic look to your space with this Crystal Blu Classic Two Lever Bar Faucet. Durably crafted from stainless steel, chrome, and copper for dependable strength, this faucet features an all-metal construction for added durability. Its 1.85 GPM water flow, included deck plate, and up to 1" installation openings make it easy to install. A 1/2" NPSM connection ensures reliable water filters, and a ceramic disc value helps to save water. This ADA compliant and lead-free faucet is suitable for both residential and commercial use and is CEC compliant.

Seems like Coke and Pepsi; very similar but stylistically different. And remember, the merchandiser should validate and tweak the descriptions in both cases. This is where the merchandiser can add great value in distinguishing the product.
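To see what those per-description prices mean for a whole catalog, the arithmetic is simple enough to script. The per-1,000 figures below are the ones quoted in this article. The dictionary keys are just labels we made up, and the 50,000-product catalog is a hypothetical example. Your own costs will vary with prompt and product text length.

```python
# Cost in US dollars per 1,000 generated descriptions, as quoted in this article.
COST_PER_1000 = {
    "palm-text-bison": 1.37,
    "palm-chat-bison": 0.66,
    "openai-davinci": 24.00,
}


def estimate_cost(model: str, n_products: int) -> float:
    """Scale the per-1,000 price linearly to a catalog of n_products."""
    return round(COST_PER_1000[model] * n_products / 1000, 4)


# Hypothetical catalog size, for illustration only.
catalog_size = 50_000
for model in COST_PER_1000:
    print(f"{model}: ${estimate_cost(model, catalog_size):,.2f}")
```

A single description works out to `estimate_cost("palm-chat-bison", 1)` dollars, which is the 0.066 cents mentioned above.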

While I found OpenAI’s GPT-3.5 Turbo quality to be consistent, its response time was slower, and we got “model maxed out” errors quite frequently, even on a paid account. Guess 1,000 monkeys were at it, stressing the system. Google’s PaLM Chat Bison and Text Bison were consistently fast. The Chat Bison model was slightly inconsistent in quality and was petulant at times on classification tasks (category determination, etc.).

We recommend Google Vertex AI’s PaLM Text Bison as a cost-effective, high-performance LLM Service for all real-time use cases where a user is waiting and ready for a response. The Chat Bison model, we are hoping, will get less finicky in its 002 avatar. Right now, it’s like a 4-year-old savant. Great at times, but a little hard to work with. This may still be a really good option if you are heavily invested in GCP.

For scheduled jobs where you can try again if the model fails and where response time isn’t a big concern, we recommend OpenAI’s GPT-3.5 Turbo model. It is very cost-efficient and has great quality. We use it for chat tasks and even for non-chat one-shot tasks such as product description, category determination, etc. While you get what you pay for, we cannot in good conscience recommend the legacy Davinci model. You could pay a lot for an antique bottle opener, but a $1 flat opener will open your beer just fine, and the beer still tastes as good.
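"Try again if the model fails" usually means retrying with a growing pause between attempts. Here is a minimal sketch of that idea. `ModelBusyError` and the `flaky` task are hypothetical placeholders for whatever overload error your provider's client raises, and the `sleep` parameter exists only so the example can run without actually waiting.

```python
import time


class ModelBusyError(Exception):
    """Hypothetical error standing in for a provider's overload response."""


def with_retries(task, attempts: int = 4, base_delay: float = 1.0, sleep=time.sleep):
    """Run task(); on ModelBusyError wait 1x, 2x, 4x... base_delay and retry."""
    for attempt in range(attempts):
        try:
            return task()
        except ModelBusyError:
            if attempt == attempts - 1:
                raise  # out of attempts: let the scheduler log the failure
            sleep(base_delay * 2 ** attempt)


# Demo: a task that fails twice, then succeeds.
calls = {"n": 0}


def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ModelBusyError("model maxed out")
    return "description text"


delays = []
result = with_retries(flaky, sleep=delays.append)  # record delays instead of sleeping
print(result, delays)
```

In a nightly job the extra seconds cost nothing. In a real-time widget with a user waiting, they would.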

Amazon’s Titan Model (offered under Bedrock) might work better for AWS customers. Since the service is currently in limited preview, we have not tested it for quality, performance, and price.

Cohere is a strong independent contender that is in collaboration with Oracle. Its performance is great, especially for the purpose-built classification endpoint. Sometimes, you want a reliable provider who your IT thinks isn’t stealing your data and your business thinks isn’t competing for your customers. Cohere is quite neutral in this regard. So, it’s a safe choice, like ordering vegan, nut-free, gluten-free dessert for your guests. It might cost you a little and may not taste as great, but no one will be hurt.

Last but not least, Claude 2 from Anthropic is best suited where you need the memory of an elephant. Its impressive ability to handle large contexts of up to 100,000 tokens was a lifesaver for one of our clients. Normally, though, you shouldn’t need such size.

It looks like we are taking the easy way out and recommending every provider, but the truth is that each has its own angle, and it isn’t exactly one size fits all. Moreover, I strongly recommend architecting your solutions so that changing a provider is a flip of a switch or at least very easy to do. With new models being released every day, it’s best to avoid vendor lock-in, both in contracts and in code. You want to keep your options open.
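What might "a flip of a switch" look like in code? One common pattern, sketched here under our own assumptions, is to hide every provider behind the same function signature and pick one by name from configuration. The provider names, the `LLM_PROVIDER` environment variable, and the fake responses are all hypothetical. Real adapters would wrap each vendor's client library.

```python
import os
from typing import Callable, Dict

# Every provider adapter takes a prompt and returns generated text.
Provider = Callable[[str], str]
PROVIDERS: Dict[str, Provider] = {}


def register(name: str):
    """Decorator that adds an adapter to the registry under a config-friendly name."""
    def decorator(fn: Provider) -> Provider:
        PROVIDERS[name] = fn
        return fn
    return decorator


@register("provider_a")
def provider_a(prompt: str) -> str:
    # Hypothetical adapter: a real one would call vendor A's API here.
    return f"[provider_a] {prompt}"


@register("provider_b")
def provider_b(prompt: str) -> str:
    # Hypothetical adapter: a real one would call vendor B's API here.
    return f"[provider_b] {prompt}"


def generate(prompt: str, provider_name: str = "") -> str:
    """Route the prompt to the configured provider; callers never import a vendor SDK."""
    name = provider_name or os.environ.get("LLM_PROVIDER", "provider_a")
    try:
        provider = PROVIDERS[name]
    except KeyError:
        raise ValueError(f"Unknown provider: {name}") from None
    return provider(prompt)


print(generate("Describe this faucet", "provider_b"))
```

Swapping vendors then means changing one setting and, at most, writing one new adapter, while the rest of the code stays untouched.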

In this article, we saw several ways this technology can assist a merchandiser, from playing with Legos to buying overpriced bottle openers. We also saw some example implementations, results, and costs from our work at AAXIS Digital. Models from OpenAI, Google, Cohere, and Anthropic each had their own pluses and minuses. Like the wheels that carry your economic engine, they should be built to be easily switched out.

Creative destruction plays a key role in entrepreneurship and economic development. By embracing these and other techniques based on technological advancements in Generative AI, merchandisers can transform their operations, improve productivity, and effectively navigate the dynamic demands of the market.

They can become the ten-handed octopus we need them to be: fast, smart, able to fit into Mason jars, and willing to adapt to the rapidly changing business landscape.

So, if you are ready to 10X your merchandisers, contact AAXIS Digital for suggestions on how to get started!
